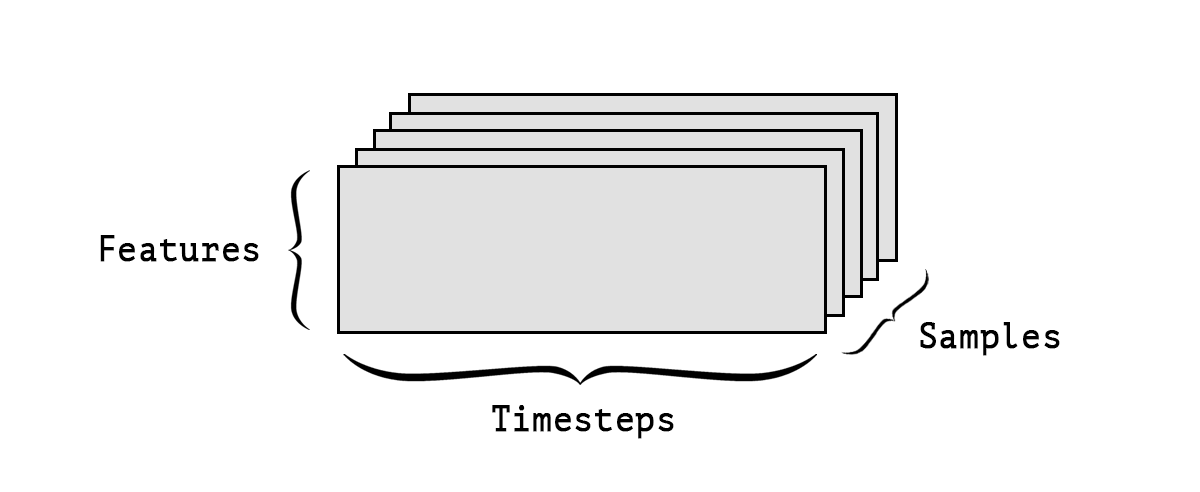

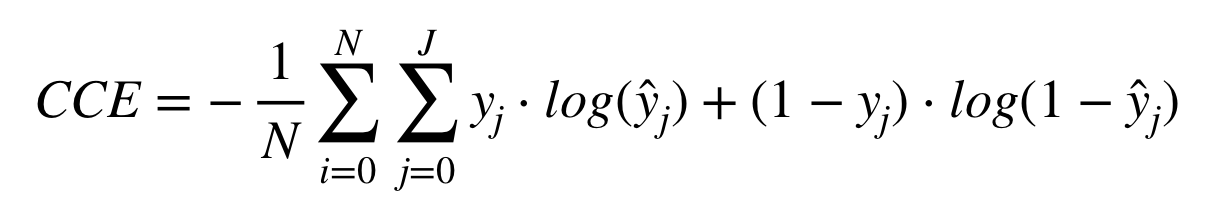

class: center, middle, inverse, title-slide

# Deep Learning using R
### Curso-R
### 2018-11-12

---

# Goals

* What are deep neural networks and how do they work?
* What software can we use to train these models, and how do the tools relate to each other?
* How do we train deep learning models for some prediction problems?

---

# Prerequisites

* Linear regression
* Logistic regression
* R: pipe (`%>%`)

---

# References

* [Deep Learning Book](https://www.deeplearningbook.org)
* [Deep Learning with R](https://www.manning.com/books/deep-learning-with-r)
* [Tensorflow for R Blog](https://blogs.rstudio.com/tensorflow/)
* [Keras examples](https://keras.rstudio.com/articles/examples/index.html)
* [Colah's blog](http://colah.github.io)

---

# Why "Deep" Learning?

* We compose many nonlinear operations, called *layers*, to learn a representation
* The number of layers is the model depth
* Nowadays we have models with more than 100 layers

--

## Alternative names

- layered representations learning
- hierarchical representations learning

---

# Layers

---

# Deep Learning

---

# Relation to Generalized Linear Models

- Linear regression: single-layer neural network, no (identity) activation
- Logistic regression: single-layer neural network, sigmoid (inverse logit) activation

---

# Logistic regression

<img src="imgs/glm.png" width="968" />

---

## Deviance function

`$$D(y,\hat\mu(x)) = \sum_{i=1}^n 2\left[y_i\log\frac{y_i}{\hat\mu_i(x_i)} + (1-y_i)\log\left(\frac{1-y_i}{1-\hat\mu_i(x_i)}\right)\right]$$`
`$$= 2 D_{KL}\left(y||\hat\mu(x)\right),$$`

where `\(D_{KL}(p||q) = \sum_i p_i\log\frac{p_i}{q_i}\)` is the Kullback-Leibler divergence.

---

## Deep learning

<img src="imgs/y1.png" width="100%" />

- Apply a linear transformation to `\(x\)`, add a bias, and then apply a nonlinear activation.
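A minimal base R sketch of the "logistic regression is a single-layer network" idea, under a few assumptions that are not part of the slides: the `iris` data restricted to two species, `Sepal.Length` as the only input, and the helper name `sigmoid`. It fits the model with `glm()`, rebuilds the predictions as a sigmoid applied to a weight times the input plus a bias, and recomputes the deviance by hand.

```r
# Keep two species so the response is binary (1 = virginica, 0 = versicolor).
dat <- subset(iris, Species != "setosa")
y <- as.numeric(dat$Species == "virginica")
x <- dat$Sepal.Length

# Logistic regression fitted the classical way.
fit <- glm(y ~ x, family = binomial())

# The same model written as one layer: sigmoid(w * x + b).
sigmoid <- function(z) 1 / (1 + exp(-z))
b <- coef(fit)[["(Intercept)"]]
w <- coef(fit)[["x"]]
mu_hat <- sigmoid(w * x + b)

# For 0/1 responses the y * log(y) terms of the deviance vanish, leaving
# twice the binary cross-entropy summed over the observations.
dev_manual <- -2 * sum(y * log(mu_hat) + (1 - y) * log(1 - mu_hat))

# Both comparisons should agree up to numerical tolerance.
same_fit <- isTRUE(all.equal(unname(fitted(fit)), mu_hat))
same_dev <- isTRUE(all.equal(deviance(fit), dev_manual))
```

If both flags are `TRUE`, the GLM and the one-layer formulation describe the same model and the same loss.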
`$$f(x) = \sigma(wx + b)$$`

---

## Loss function

`$$D_{KL}(p(x)||q(x))$$`

--

<img src="imgs/thinking.png" width="20%" style="display: block; margin: auto;" />

---

# Optimization: Stochastic Gradient Descent

```
for (i in 1:num_epochs) {
  # compute_gradient() is a placeholder for the gradient of the loss with
  # respect to params; in SGD, `data` is a random mini-batch at each step
  grads <- compute_gradient(data, params)
  # move against the gradient, scaled by the learning rate
  params <- params - learning_rate * grads
}
```

---

# SGD

<img src="https://user-images.githubusercontent.com/4706822/48280375-870fdd00-e43a-11e8-868d-c5afa9e7c257.png" style="width: 90%">

<img src="https://user-images.githubusercontent.com/4706822/48280383-8d05be00-e43a-11e8-96e8-7f55b697ef6f.png" style="width: 90%">

---

## TensorFlow

A numerical computation library

- Developed at Google Brain for neural network research
- Open source
- Automatic differentiation
- Runs on GPUs

---

## Tensor (2d)

```
##       Sepal.Length Sepal.Width Petal.Length Petal.Width Species
##  [1,]          5.1         3.5          1.4         0.2       1
##  [2,]          4.9         3.0          1.4         0.2       1
##  [3,]          4.7         3.2          1.3         0.2       1
##  [4,]          4.6         3.1          1.5         0.2       1
##  [5,]          5.0         3.6          1.4         0.2       1
##  [6,]          5.4         3.9          1.7         0.4       1
##  [7,]          4.6         3.4          1.4         0.3       1
##  [8,]          5.0         3.4          1.5         0.2       1
##  [9,]          4.4         2.9          1.4         0.2       1
## [10,]          4.9         3.1          1.5         0.1       1
```

---

## Tensor (3d)

---

## Tensor (4d)

<img src="https://github.com/curso-r/deep-learning-R/blob/master/4d-tensor.png?raw=true" style="width: 60%">

---

## TensorFlow

.pull-left[

]

.pull-right[

- Define the graph
- Compile and optimize
- Execute
- Nodes are calculations
- The tensors *flow* along the nodes

]

---

## Keras

* An API for specifying deep learning models in an intuitive way.
* Created by François Chollet (@fchollet).

<img src="https://pbs.twimg.com/profile_images/831025272589676544/3g6BrXCE_400x400.jpg" style="width: 40%;">

* Originally implemented in Python.

---

## Keras for R

---

## Keras + R

* R package: [`keras`](https://github.com/rstudio/keras).
* Built on [reticulate](https://github.com/rstudio/reticulate).
* Developed by JJ Allaire (CEO at RStudio).
* R-like syntax using `%>%`.
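A rough sketch of what the pipe-based syntax can look like, assuming the `keras` R package and a working TensorFlow backend are installed. The layer size, the four-feature input and the choice of loss and optimizer are illustrative assumptions, not taken from the course examples.

```r
library(keras)

# One dense unit with a sigmoid activation: logistic regression
# written as a Keras model. Four input features are assumed here.
model <- keras_model_sequential() %>%
  layer_dense(units = 1, activation = "sigmoid", input_shape = 4)

# compile() attaches the loss and the optimizer to the model.
model %>% compile(
  loss = "binary_crossentropy",
  optimizer = "sgd",
  metrics = "accuracy"
)
```

Each `%>%` passes the model built so far to the next function, so layers read top to bottom in the order they are stacked.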
<img src="https://i.ytimg.com/vi/D8yF9AtTTuQ/maxresdefault.jpg" style="width: 40%;">

---

# Example 01

---

# Activation

---

# Activation problems

<img src="https://user-images.githubusercontent.com/4706822/48278771-a0625a80-e435-11e8-97b7-031015b1d165.png" style="position: fixed; top: 330px; left: 300px; width: 50%;">

---

# Example 02

---

# Example 03

---

# Convolutions

<img src="https://user-images.githubusercontent.com/4706822/48281225-10281380-e43d-11e8-9879-6a7e1b51df15.gif" style="position: fixed; top: 200px; left: 50px; width: 30%">

--

<img src="https://user-images.githubusercontent.com/4706822/48281225-10281380-e43d-11e8-9879-6a7e1b51df15.gif" style="position: fixed; top: 200px; left: 300px; width: 30%">

<img src="https://user-images.githubusercontent.com/4706822/48281225-10281380-e43d-11e8-9879-6a7e1b51df15.gif" style="position: fixed; top: 200px; left: 600px; width: 30%">

---

# Convolutions

---

# Max Pooling

---

# Convolutions

---

# Binary Cross-Entropy

---

# Example 04

---

# Categorical Cross-Entropy

---

# Example 05

---

# Recurrent Neural Networks

---

# LSTM

---

# Example 06

---

# Stalk me

- Curso-R: [jtrecenti@curso-r.com](mailto:jtrecenti@curso-r.com)
- CONRE-3: [jtrecenti@conre3.org.br](mailto:jtrecenti@conre3.org.br)

## Pages:

- https://curso-r.com
- https://curso-r.com/blog
- https://curso-r.com/material
- https://github.com/curso-r

Presentation: https://jtrecenti.github.io/slides/ufba-dl/

Code: https://github.com/jtrecenti/slides